Anthropic's Privacy Betrayal

Claude users have until September 28 to opt out of AI training as Anthropic abandons its privacy-first promise, extending data retention from 30 days to five years. The consent interface uses manipulative dark patterns while Microsoft launches in-house AI models to challenge OpenAI dependence.

Issue #13 - September 01, 2025 | 5-minute read

👋 Like what you see?

This is our weekly AI newsletter. Subscribe to get fresh insights delivered to your inbox.

INFOLIA AI

Making AI accessible for everyday builders

👋 Hey there!

Anthropic just broke its privacy-first promise: Claude users have until September 28 to opt out of AI training, with data retention jumping from 30 days to five years. The consent interface uses manipulative "dark patterns" with sharing pre-activated. Meanwhile, Microsoft launched in-house AI models and AI security spending surged 40%. Let's dive into what this means.

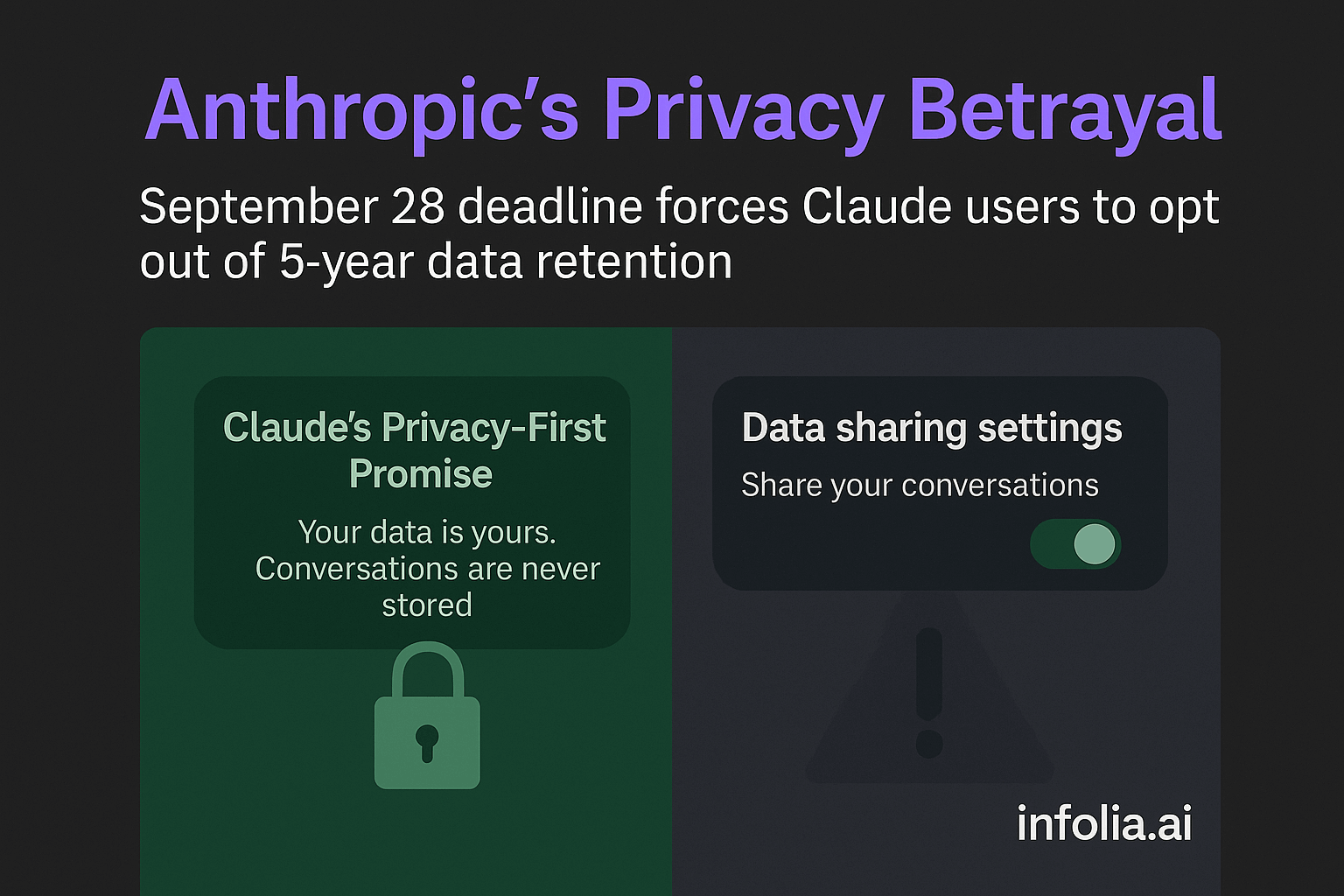

🔒 Anthropic's Privacy Betrayal: How Claude Abandoned Its "Privacy-First" Promise with a September 28 Ultimatum

The assumption everyone believed: Anthropic was the "ethical" AI company that prioritized user privacy over data collection, automatically deleting user conversations after 30 days.

The shocking reality: Anthropic announced that Claude users must decide by September 28, 2025 whether to allow their conversations to be used for AI training, with data retention extended to five years for those who consent. This represents a complete reversal of its previous privacy-first stance, under which consumer chat data was never used for model training.

The change affects millions of Claude Free, Pro, and Max users, including those using Claude Code. But here's where it gets manipulative: existing users see a pop-up with a prominent black "Accept" button, while the toggle to allow data for AI training is small, pre-activated by default, and easy to miss. Privacy experts are calling this a classic "dark pattern" designed to trick users into sharing their data.

Previously, users were told their prompts and conversation outputs would be automatically deleted from Anthropic's backend within 30 days unless legally required to keep them longer. Now, those who don't actively opt out will have their conversations stored and analyzed for up to five years.

By the numbers:

- September 28, 2025 deadline for Claude users to make their privacy choice

- 5 years of data retention for users who don't opt out (vs. previous 30 days)

- Millions of Claude users affected by the policy change across Free, Pro, and Max plans

Why the sudden change? Like every other large language model company, Anthropic needs data more than it needs people to have fuzzy feelings about its brand. Training AI models requires vast amounts of high-quality conversational data, and accessing millions of Claude interactions should provide exactly the kind of real-world content that can improve Anthropic's competitive positioning against rivals like OpenAI and Google.

The timing is also suspicious. OpenAI is currently fighting a court order that forces the company to retain all consumer ChatGPT conversations indefinitely, including deleted chats, because of a lawsuit filed by The New York Times and other publishers. Industry pressure is forcing all AI companies to change their data practices.

Bottom line: The "ethical AI" company just proved there's no such thing when competitive pressure mounts. If you use Claude, check your settings before September 28 or risk having years of your conversations used to train Anthropic's models.

🛠️ Tool Updates

Microsoft MAI-Voice-1 - Generates a full minute of audio in under a second on single GPU → First in-house model challenging OpenAI dependence

Google Gemma 3 270M - Lightweight open-source model → Edge deployment with minimal compute requirements

OpenAI gpt-realtime - Most capable voice model with mid-sentence tone changes → Enhanced real-time conversational AI

💰 Cost Watch

AI security spending surge: 66.5% of IT leaders have already experienced budget-impacting overages tied to AI or consumption-based pricing. Companies are increasing security spending by 40% to audit AI-generated code and detect AI-powered attacks following Anthropic's recent threat intelligence reports.

💡 Money-saving insight: 53% of subscription-based businesses now offer some form of usage-based pricing, up from just 31% the year prior. Monitor your AI tool usage closely—these consumption-based models can lead to unexpected costs that derail budgets.
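Here's one rough way to keep an eye on consumption-based costs: a minimal Python sketch that projects month-end spend from the usage you've logged so far. Everything in it is an assumption for illustration (the `UsageDay` record, the per-million-token price, the 30-day month, and the simple linear projection). It isn't tied to any vendor's billing API, so you'd feed it numbers from your own usage export.

```python
from dataclasses import dataclass


@dataclass
class UsageDay:
    """One day of logged usage (hypothetical record shape)."""
    day: int      # day of the month, 1-based
    tokens: int   # tokens consumed that day


def projected_month_spend(days, price_per_million_tokens, days_in_month=30):
    """Linearly project month-end spend from the days observed so far."""
    if not days:
        return 0.0
    avg_tokens_per_day = sum(d.tokens for d in days) / len(days)
    projected_tokens = avg_tokens_per_day * days_in_month
    return projected_tokens * price_per_million_tokens / 1_000_000


def over_budget(days, price_per_million_tokens, monthly_budget, days_in_month=30):
    """True if the projected spend exceeds the monthly budget."""
    projected = projected_month_spend(days, price_per_million_tokens, days_in_month)
    return projected > monthly_budget


if __name__ == "__main__":
    # Assumed example figures, not real pricing.
    usage = [UsageDay(1, 2_000_000), UsageDay(2, 4_000_000)]
    print(projected_month_spend(usage, price_per_million_tokens=10.0))
    print(over_budget(usage, price_per_million_tokens=10.0, monthly_budget=500.0))
```

A linear projection is crude (it ignores weekends and launch spikes), but it's usually enough to catch a budget overrun in week one instead of on the invoice.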

🔧 Quick Wins

🔍 Privacy audit: Check your Claude privacy settings at claude.ai/settings/data-privacy-controls before September 28—the default is set to share your data for training.

🎯 Usage monitoring: Some forecasts say AI prices will drop 10x annually, but in the short term we're seeing the opposite, especially among enterprise vendors. Track your spend under consumption-based pricing to avoid budget surprises.

⚡ Security review: Implement AI usage monitoring and anomaly detection before security incidents force expensive emergency audits—proactive security costs 70% less than reactive responses.
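If you want a starting point for the usage monitoring and anomaly detection mentioned above, here's a minimal Python sketch. It flags any day whose usage sits far above the average of the preceding week. The function name, the 7-day window, and the 3-standard-deviation threshold are all assumptions chosen for illustration. A real setup would likely pull counts from your provider's usage logs and tune these values.

```python
from statistics import mean, stdev


def flag_anomalies(daily_usage, window=7, threshold=3.0):
    """Return indices of days whose usage is more than `threshold`
    standard deviations above the mean of the previous `window` days."""
    flagged = []
    for i in range(window, len(daily_usage)):
        history = daily_usage[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any increase as worth a look.
            if daily_usage[i] > mu:
                flagged.append(i)
            continue
        if (daily_usage[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    # Assumed daily request counts; the spike on index 7 should be flagged.
    usage = [100, 102, 98, 101, 99, 100, 103, 500, 101]
    print(flag_anomalies(usage))
```

The check only looks for spikes above the baseline, since sudden jumps in usage are what tend to signal a leaked key or a runaway agent. Note that a big spike inflates the trailing window for the following week, so later anomalies may be harder to catch until it rolls off.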

🌟 What's Trending

Organizational transformation: Microsoft's AI platform product lead Asha Sharma predicts that AI agents could kill the org chart. In her words, "the org chart starts to become the work chart" as tasks and throughput become more important than hierarchical structure.

In-house AI revolution: Microsoft launched MAI-Voice-1 and MAI-1-preview, potentially freeing Microsoft from its overreliance on OpenAI's technology as the company builds its own AI stack for future products.

AI hype reality check: Gartner identifies AI agents and AI-ready data as the two fastest advancing technologies at the Peak of Inflated Expectations, suggesting the current excitement may outpace actual capabilities.

💬 Are you reconsidering your AI tool choices?

With Anthropic abandoning its privacy promises and Microsoft building alternatives to OpenAI, how are you adapting your AI toolkit? Are you switching providers, implementing stricter data policies, or just accepting the new reality? Hit reply - I read every message and I'm curious about how developers are navigating these rapid changes.

— Pranay, Infolia AI
