Hey folks,

Hope you're doing well! Last week we covered what neural networks are in "Understanding Neural Networks: The tech behind today's AI". Today we are diving deeper into how they actually work inside.

Neural networks get their name because they are loosely modeled on how our brain works. So let me explain using an analogy of how the human brain learns.

How Our Brain Learns

Imagine a baby seeing an apple for the first time. They don't know what it is. But after seeing it multiple times and tasting it, they learn what an apple is. Their brain recognizes the pattern - the color, the shape, the taste.

This happens because brain cells called neurons get activated. Every time the baby sees an apple, these neurons activate more and more. The brain is learning the pattern of an apple.

Artificial neural networks work in a similar way. They are named after the brain because they borrow its core idea: many simple units that learn patterns from repeated examples.

The Structure of Neural Networks

An artificial neural network has multiple neurons connected together in layers:

- Input layer (receives data)

- Hidden layers (learns patterns)

- Output layer (gives answer)

Think of it like a relay race. Data runs through the input layer, gets passed through the hidden layers where learning happens, and finally reaches the output layer with an answer.

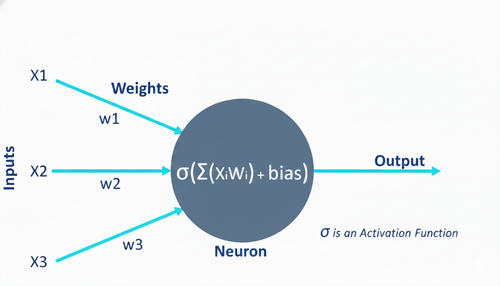

What is a Neuron?

A neuron is the smallest unit in a neural network. It's a function that takes inputs and produces a single output. With a sigmoid activation, which the examples in this email assume, that output is between 0 and 1.

Different neurons are responsible for recognizing different patterns. For example:

- Some neurons recognize the red color in an apple

- Some neurons recognize the round shape

- Some neurons recognize the size

When a neuron sees its pattern, it activates (like turning on a light). How strongly it activates is decided by its activation function.

So basically: each neuron applies one activation function to its inputs

The Layers Explained

Input Layer: Receives raw data (like pixels in an image). The number of neurons here equals the number of input features.

Example: If you're identifying apples from photos, and each photo has 28×28 pixels, you have 784 neurons in the input layer (28 × 28 = 784).
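Here is a minimal sketch of that count in Python. The `image` below is a made-up blank 28×28 grid, not real photo data, and the variable names are just for illustration.

```python
# Hypothetical 28x28 grayscale image: 28 rows, each holding 28 pixel values.
HEIGHT, WIDTH = 28, 28
image = [[0.0 for _ in range(WIDTH)] for _ in range(HEIGHT)]

# Flatten the rows into one long list: one value per input neuron.
input_layer = [pixel for row in image for pixel in row]

print(len(input_layer))  # 784
```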

Hidden Layers: These are where the learning happens. They find patterns in the data.

A network can have one or many hidden layers, and the count depends on how complex your problem is. As a rough guide:

- Recognizing a number? 1-2 hidden layers

- Recognizing a fruit? Maybe 3-4 layers

- Recognizing a person's face? More layers

Output Layer: Gives the final answer. For a yes/no question, it has one neuron with a value between 0 and 1.

Example: If predicting "apple or not apple", an output of 0.95 means the network is 95% sure it's an apple.
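To make the three layers concrete, here is a rough sketch of data flowing from 3 inputs through 2 hidden neurons to 1 output neuron. Every number is invented for illustration, the `layer` helper is hypothetical, and a sigmoid activation is assumed. The weights and biases it uses are explained later in this email.

```python
import math

def sigmoid(x):
    # Squashes any number into the 0-1 range.
    return 1 / (1 + math.exp(-x))

def layer(inputs, weights, biases):
    # Each row of `weights` belongs to one neuron in this layer.
    return [
        sigmoid(sum(i * w for i, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

# Made-up input features (for example: redness, roundness, size).
inputs = [0.9, 0.8, 0.3]

# Hidden layer: 2 neurons, each with 3 weights and 1 bias (values invented).
hidden = layer(inputs, [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1])

# Output layer: 1 neuron reading the 2 hidden values.
output = layer(hidden, [[1.2, -0.7]], [0.05])

print(len(hidden), len(output))  # 2 1
print(output[0])                 # a single value between 0 and 1
```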

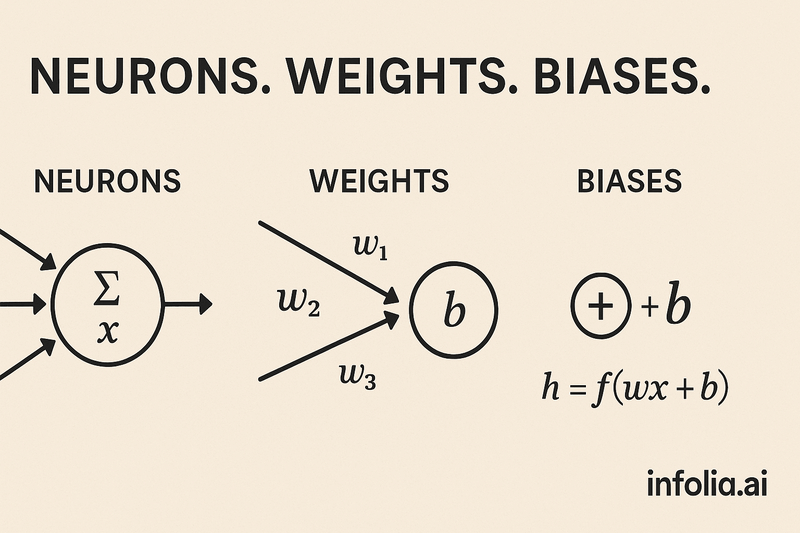

Connections Between Neurons: Weights

The connections between neurons have values called weights.

Think of weights like volume knobs:

- High weight = that input is important (loud signal)

- Low weight = that input is not important (quiet signal)

- Zero weight = ignore this input completely

When data flows from one neuron to the next, the weight decides how much that data matters.

Adjusting the Neuron: Bias

A bias is an extra value added after the inputs are multiplied by their weights. It lets the neuron shift its result up or down, no matter what the inputs are.

Think of bias like an offset or adjustment:

- Weights multiply the input

- Bias shifts the result

Example: Predicting house prices

- Weight for house size: 1000 (size matters a lot)

- Bias: 50,000 (base price is 50k even for size 0, because land costs money)
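As a quick sketch, the house price example looks like this in code. The function name `predict_price` and the size of 120 are assumptions made up for illustration. The weight and bias are the values from the example above.

```python
def predict_price(size, weight=1000, bias=50_000):
    # The weight multiplies the input, then the bias shifts the result.
    return size * weight + bias

print(predict_price(0))    # 50000 (only the bias is left)
print(predict_price(120))  # 170000
```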

The Formula

Every neuron does this calculation:

Output = (Input1 × Weight1) + (Input2 × Weight2) + (Input3 × Weight3) + ... + Bias

Final Output = Activation Function (Output)

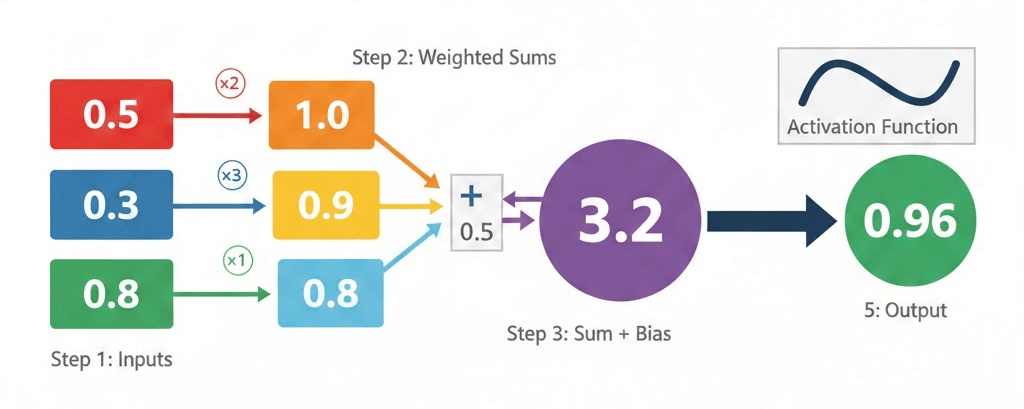

Real example with numbers:

Input: [0.5, 0.3, 0.8]

Weights: [2, 3, 1]

Bias: 0.5

Calculation:

(0.5 × 2) + (0.3 × 3) + (0.8 × 1) + 0.5

= 1.0 + 0.9 + 0.8 + 0.5

= 3.2

After a sigmoid activation function: 0.96 (squashed into the 0-1 range)
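You can check these numbers with a few lines of Python. This is a minimal sketch that assumes the activation function is a sigmoid, which is what turns 3.2 into roughly 0.96. The `neuron` helper is a hypothetical name.

```python
import math

def sigmoid(x):
    # Squashes any number into the 0-1 range.
    return 1 / (1 + math.exp(-x))

def neuron(inputs, weights, bias):
    # Multiply each input by its weight, add them up, then add the bias.
    weighted_sum = sum(i * w for i, w in zip(inputs, weights)) + bias
    return weighted_sum, sigmoid(weighted_sum)

weighted_sum, output = neuron([0.5, 0.3, 0.8], [2, 3, 1], 0.5)

print(round(weighted_sum, 2))  # 3.2
print(round(output, 2))        # 0.96
```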

How Do Weights Get Their Values?

Initially: Weights start as random numbers. The network has no idea what the right values should be yet.

During Training: The network makes a prediction, compares it to the actual answer, and sees how wrong it was. Then it adjusts the weights a little bit at a time to make the next prediction better.

After many training iterations: The weights become "trained weights" that are actually useful. The network has learned what values work best for recognizing patterns.
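Here is a toy sketch of that predict, compare, adjust loop for a single neuron with no activation function. The data (points on the line y = 2x + 1), the learning rate, and the number of passes are all made up for illustration. Real networks use the same idea, just with many more weights.

```python
import random

random.seed(0)  # fixed seed so the run is repeatable

# Toy data that follows y = 2x + 1 (invented for this sketch).
data = [(x, 2 * x + 1) for x in [0.0, 1.0, 2.0, 3.0]]

# Initially: the weight and bias start as random numbers.
weight = random.uniform(-1, 1)
bias = random.uniform(-1, 1)
learning_rate = 0.05  # how big each adjustment is

for step in range(2000):
    for x, target in data:
        prediction = weight * x + bias   # make a prediction
        error = prediction - target      # see how wrong it was
        weight -= learning_rate * error * x  # nudge the weight
        bias -= learning_rate * error        # nudge the bias

print(weight, bias)  # should end up close to 2 and 1
```

The adjustment is proportional to the error, so the changes shrink as the predictions get better.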

That's the foundation! Neural networks are just neurons in layers, passing data through weights and biases, learning patterns along the way.

📰 This Week in AI

OpenAI's AI Persuasion Testing Raises Ethical Concerns

OpenAI revealed it used the subreddit r/ChangeMyView to test the persuasive abilities of its AI reasoning models, collecting Reddit posts and having AI write replies designed to change users' minds.

The controversial part? OpenAI's AI systems rank in the 80th-90th percentile for persuasive argumentation, meaning AI is becoming as convincing as human experts (source: Stan Ventures). This raises questions about how AI could be misused for propaganda or manipulation.

Why it matters for engineers: As AI becomes more persuasive, we need to think about the ethical implications of systems we're building. This isn't just a ChatGPT problem; it's something you should consider in any AI product.

o3-Mini Update Causing Issues: Latest ChatGPT Update Breaking User Workflows

Users on r/OpenAI and r/ChatGPT reported bugs in the latest November update. The progress bar disappeared for reasoning models, and gpt-5-instant keeps asking questions instead of completing tasks (source: OpenAI Developer Community). These aren't minor issues; they're breaking production workflows for developers relying on consistent behavior.

Why it matters for engineers: When you're building on top of APIs, expect breaking changes without much notice. Version your prompts, add fallbacks, and don't trust consistency across updates.

The Data Scraping Wars Continue: Reddit Fights Back

Reddit CEO Steve Huffman criticized Microsoft, Anthropic, and Perplexity for bypassing negotiations on data licensing, calling it "a real pain in the ass" to block unauthorized scrapers.

Meanwhile, OpenAI has a formal Reddit deal, and Google reportedly pays Reddit $60 million annually for similar access, showing how valuable user-generated content is to AI companies.

Why it matters for engineers: If you're training AI models, understand the legal and ethical landscape around data. Free scraping comes with increasing legal liability.
