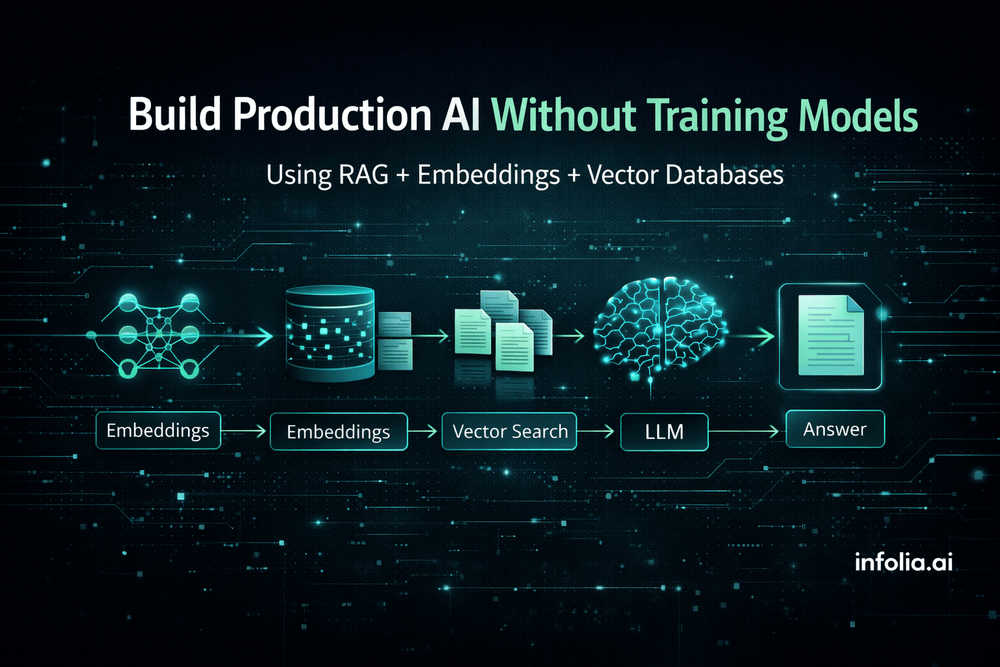

What is RAG? Building Production AI Without Training Models

Production AI without model training: How RAG works with code examples.

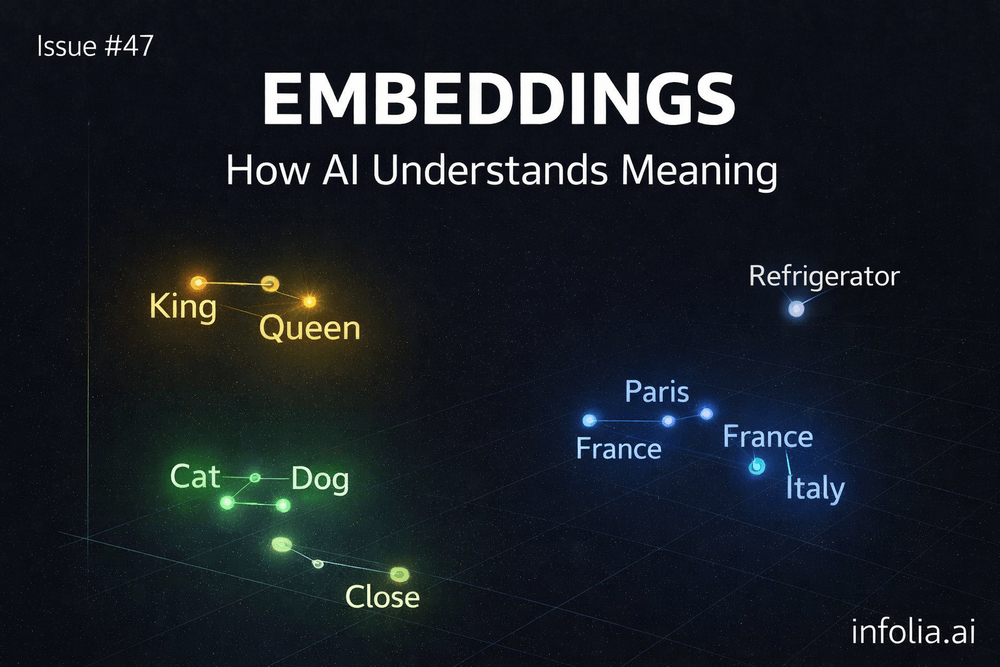

Embeddings & Vector Spaces: How AI Understands Meaning

How AI turns words into vectors that capture their meaning.

Transformers: The Architecture That Changed Everything

The architecture behind ChatGPT, Claude, and most of today's major AI breakthroughs, explained simply.

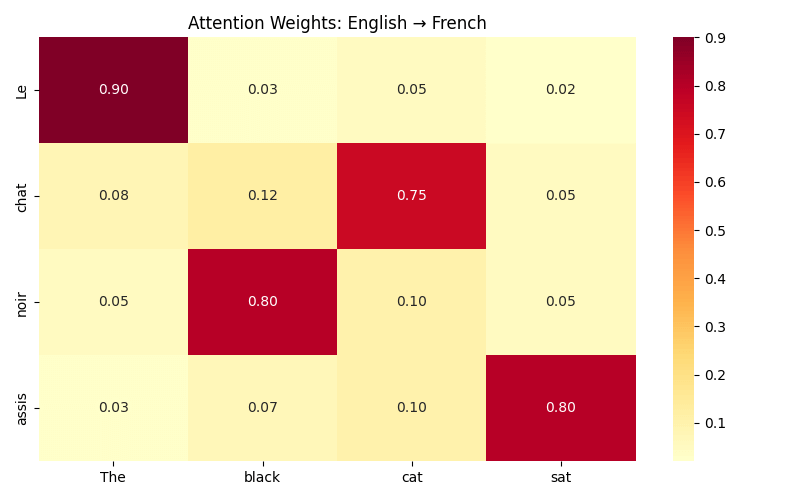

Attention Mechanisms: Teaching Neural Networks Where to Look

How attention builds weighted combinations of embeddings, and why it replaced RNNs in modern AI.

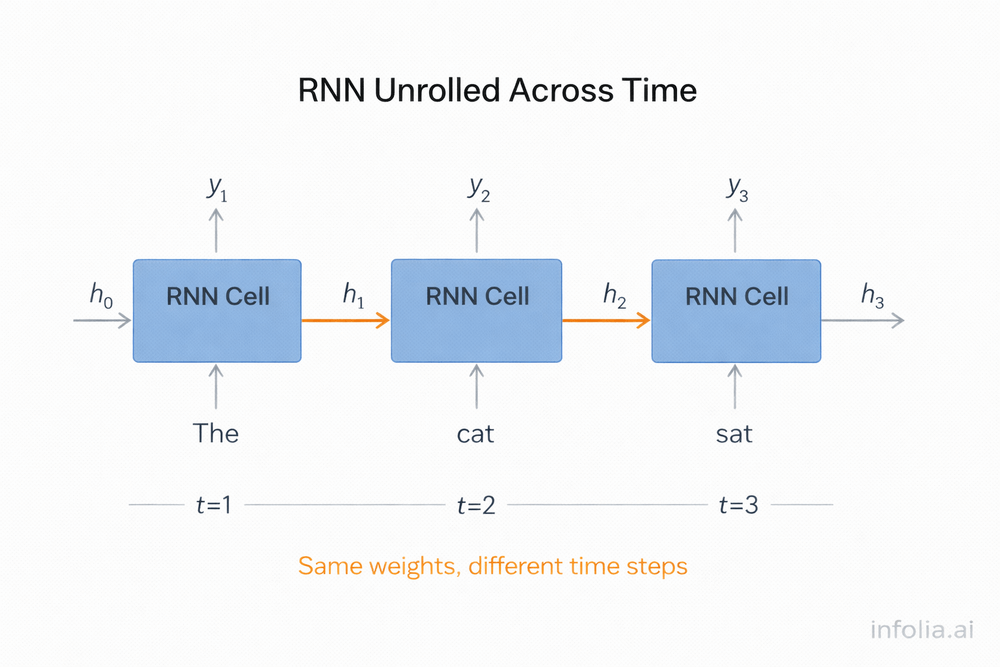

Recurrent Neural Networks: Processing Sequences and Time

How RNNs process sequences through hidden state loops and LSTM gates.

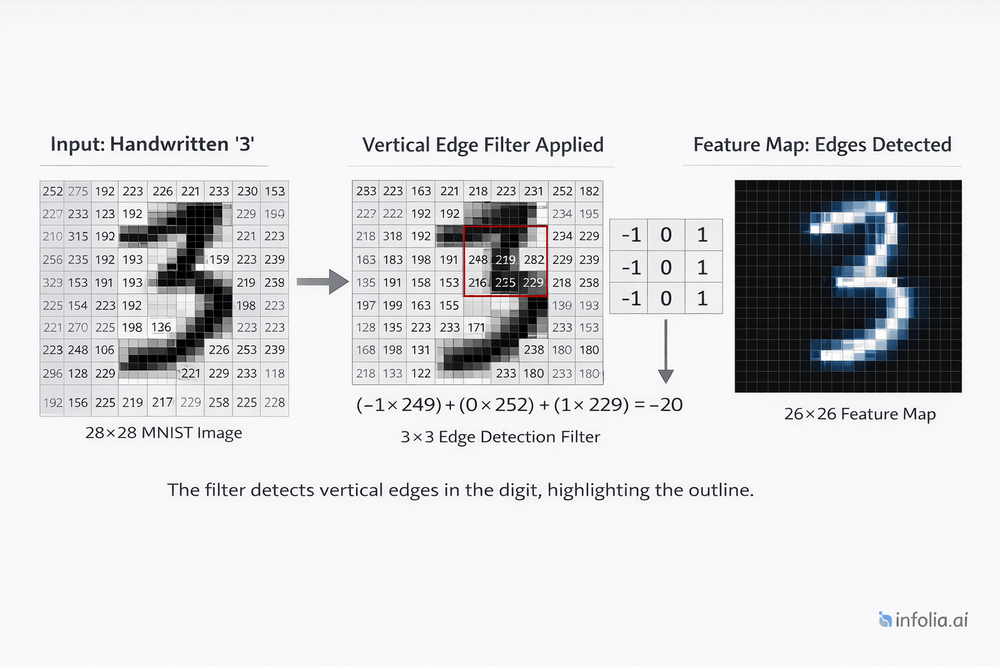

Convolutional Neural Networks: How AI Sees Images

From edge detection to face recognition: how CNNs use sliding filters and parameter sharing to understand images with 1000x fewer parameters than fully connected networks.

AI in 2026: The 'Show Me the Money' Year

Week 1 of 2026: ROI pressure, quantum bets, transformer plateau, and physical AI

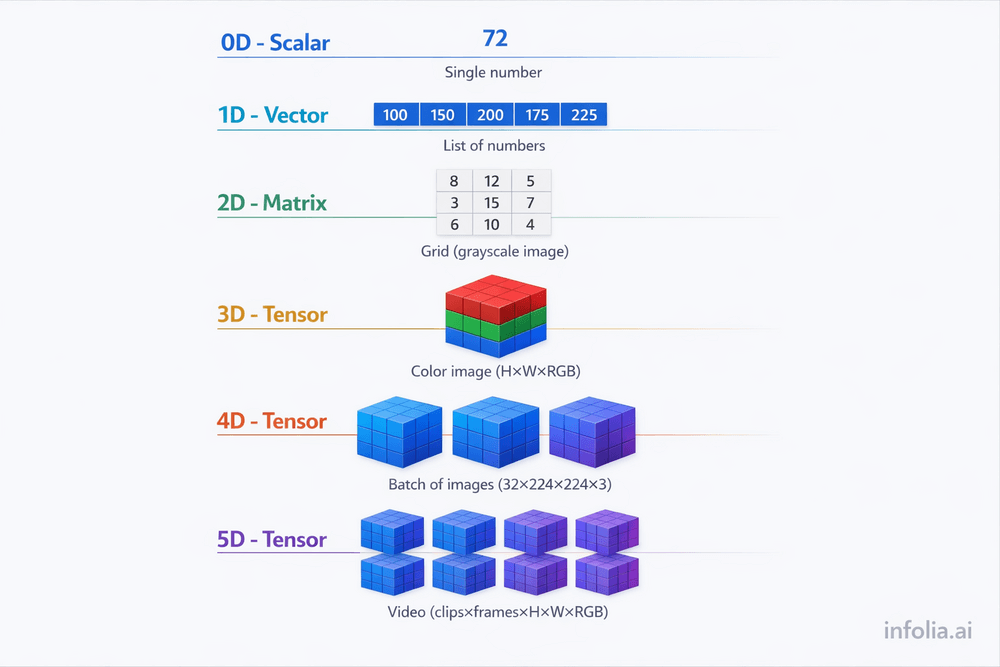

What Are Tensors? (And Why Modern AI Needs Them)

The multi-dimensional data structures that power modern AI architectures.

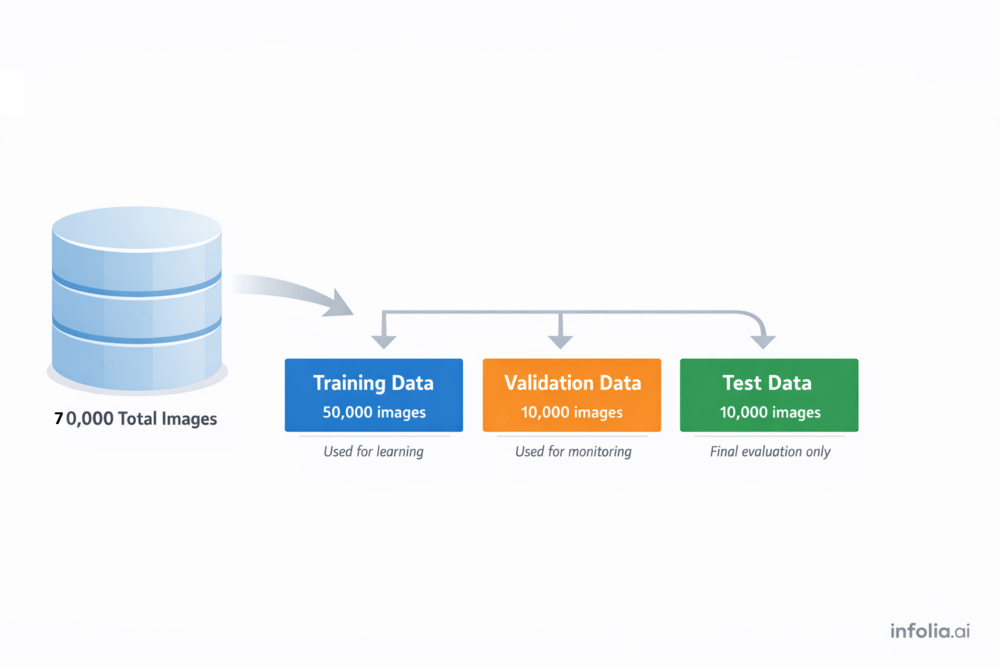

Training Neural Networks: The Complete Learning Loop

The complete 7-step training loop: epochs, batches, overfitting, and when to stop.

How Neural Networks Actually Learn (Gradient Descent)

What gradient descent is, how it uses gradients to update weights, and why this optimization algorithm is what makes neural network learning possible.

Loss Functions - How Neural Networks Measure Their Mistakes

What loss functions are, why neural networks need them, and how to choose between mean squared error (MSE) and cross-entropy for your problem.

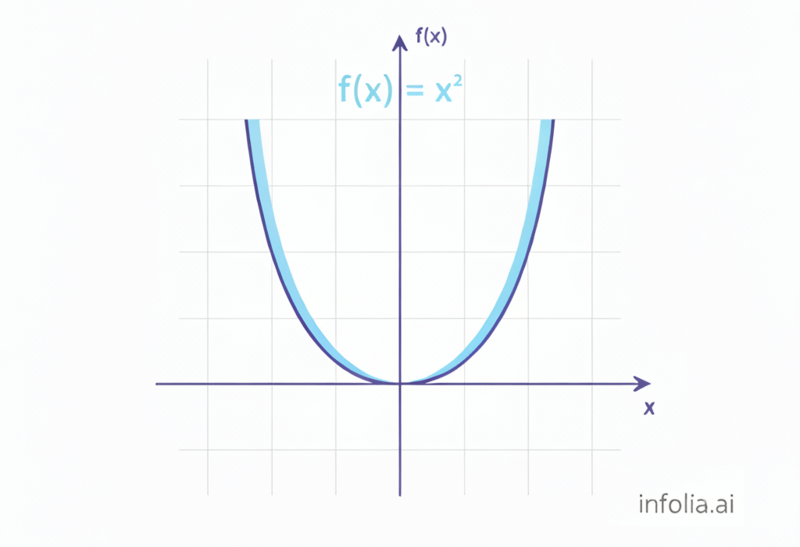

Activation Functions: Why Neural Networks Need Them

Understand why neural networks need activation functions and how ReLU, Sigmoid, and Tanh introduce the non-linearity that makes deep learning work.

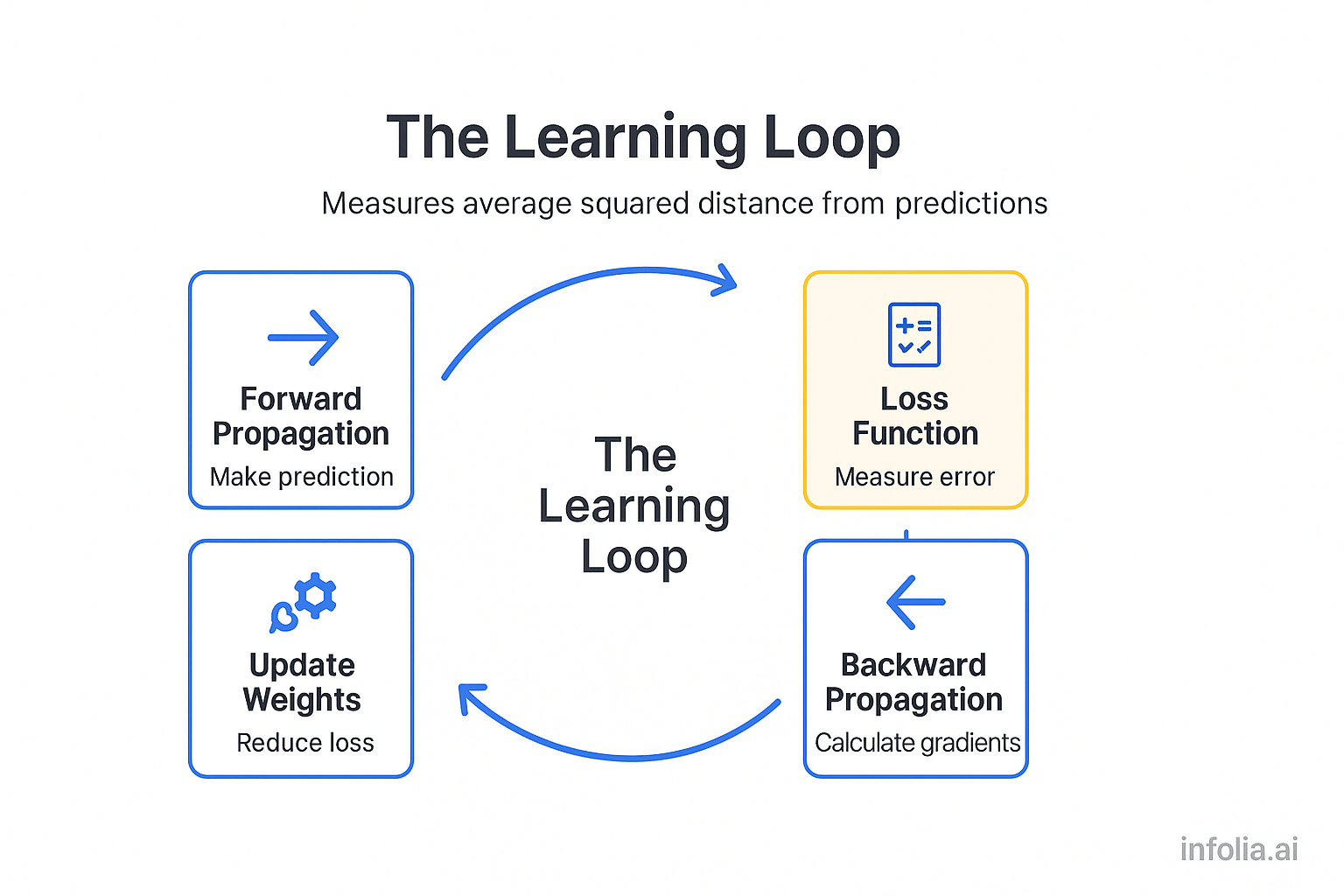

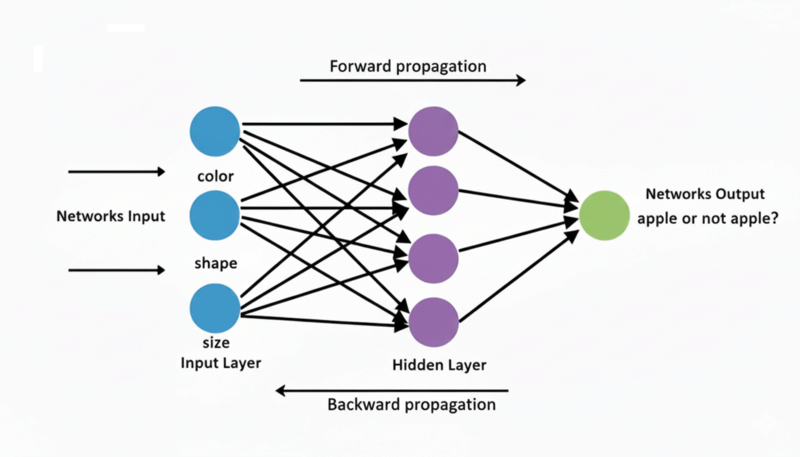

How Neural Networks Learn (Forward & Backward Propagation)

How neural networks learn through forward and backward propagation: data flows forward, the error is calculated, and the weights are adjusted to improve predictions.

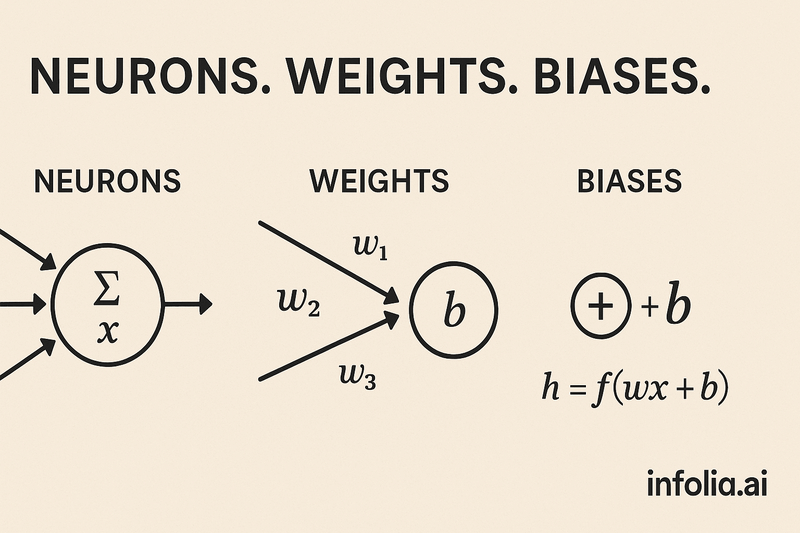

Inside a Neural Network: Neurons, Weights, and Biases Explained

The building blocks of neural networks: how neurons, weights, and biases work together to help AI learn patterns from data.
