Three days after release, Claude Opus 4.6 is already reshaping how developers think about AI agents. LinkedIn is flooded with claims that native agent capabilities have "killed the wrapper bot era." Custom agent frameworks are supposedly obsolete.

The reality is more nuanced. Opus 4.6 does represent a genuine leap in native capabilities, but the question isn't whether custom code is dead. It's which approach makes sense for your specific use case.

I've spent the days since release testing Opus 4.6's agent teams, comparing them against custom implementations in LangChain, CrewAI, and raw API orchestration. Here's what actually matters for production deployments.

What's Actually New in Opus 4.6

Anthropic released Opus 4.6 on February 5, 2026, less than three months after Opus 4.5. The release includes several substantive improvements beyond the marketing claims.

Core Specifications:

- 200K standard context window (1M token beta available)

- 128K max output tokens (doubled from 64K)

- Pricing unchanged: $5 input / $25 output per million tokens

- Available immediately via API and major cloud platforms

Performance Benchmarks:

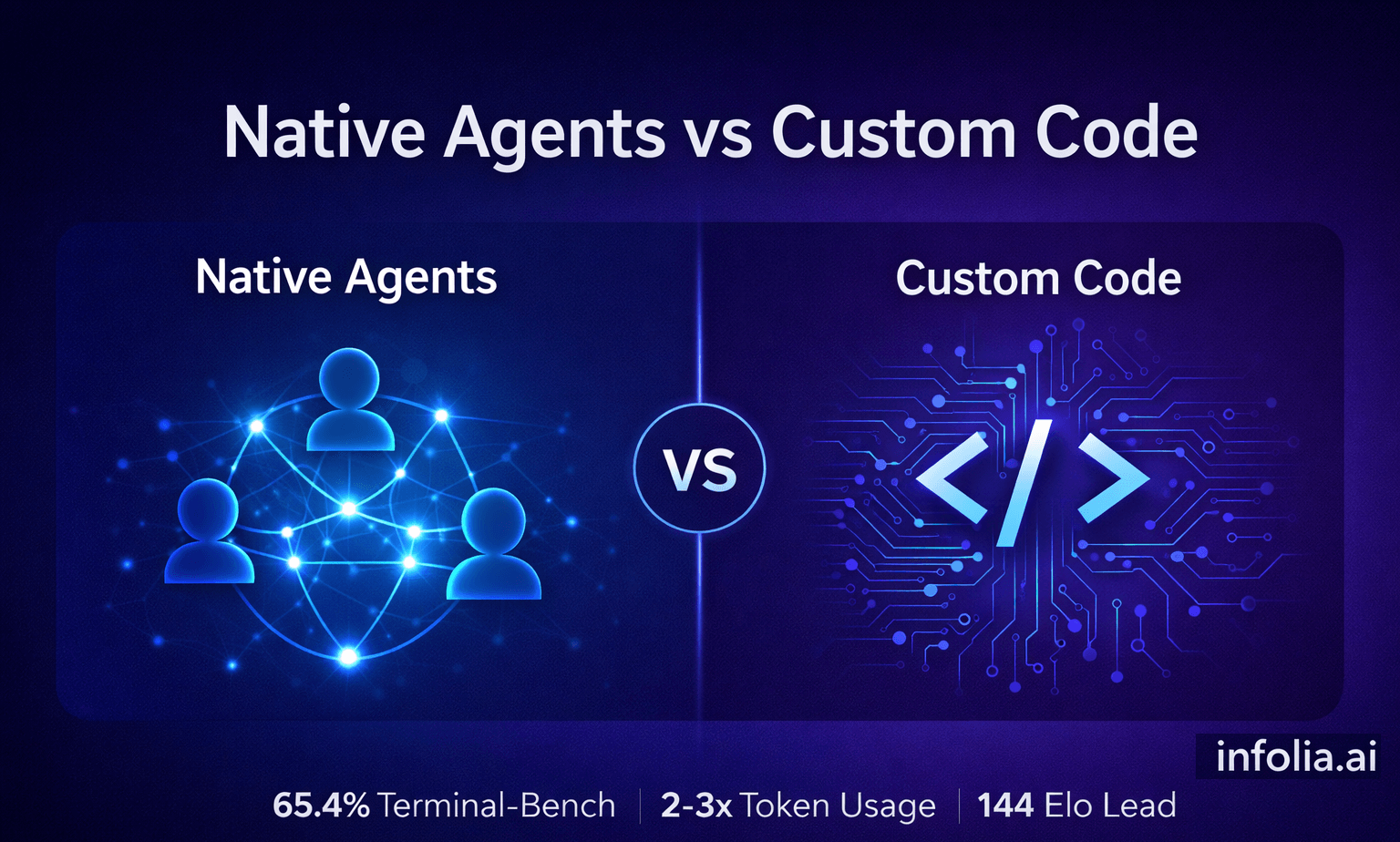

Opus 4.6 achieves 65.4% on Terminal-Bench 2.0, the highest score recorded for agentic coding tasks. On GDPval-AA, which measures real-world professional tasks in finance and legal workflows, it reaches 1606 Elo, a 144-point lead over GPT-5.2.

The model scores 76% on MRCR v2, a needle-in-a-haystack benchmark testing context retrieval. Sonnet 4.5 scores 18.5% on the same benchmark. This represents a qualitative shift in how much context the model can actually use while maintaining peak performance.

Adaptive Thinking:

The most significant change is adaptive thinking, which replaces the previous binary extended thinking toggle. Opus 4.6 now dynamically decides when deeper reasoning helps based on task complexity.

Four effort levels control the tradeoff:

- Low: Quick responses, minimal thinking

- Medium: Balanced approach

- High (default): Deep reasoning for most tasks

- Max: Maximum capability for hardest problems

This granular control matters because Opus 4.6 often thinks more deeply than necessary for simple tasks, adding latency and cost. Setting effort to medium on straightforward requests can reduce inference time by 40% while maintaining quality.
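One way to act on this is to pick the effort level per request instead of leaving everything at the default. The sketch below is a hypothetical heuristic, not part of Anthropic's API: `Task`, `pick_effort`, and the thresholds are my own assumptions, and how the chosen level gets passed to the model should be taken from the official documentation.

```python
from dataclasses import dataclass

# The four effort levels listed above, lowest to highest.
EFFORT_LEVELS = ("low", "medium", "high", "max")


@dataclass
class Task:
    """Hypothetical description of a request; the fields are illustrative."""
    prompt: str
    needs_multistep_reasoning: bool = False
    is_critical: bool = False


def pick_effort(task: Task) -> str:
    """Return an effort level using assumed, untuned thresholds."""
    if task.is_critical and task.needs_multistep_reasoning:
        return "max"
    if task.needs_multistep_reasoning:
        return "high"
    # Short, simple prompts rarely benefit from deep reasoning.
    if len(task.prompt) < 200:
        return "low"
    return "medium"
```

In practice the thresholds would be tuned against measured latency and quality for your own workload rather than guessed.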

Understanding Native Agent Teams

The flagship feature in Opus 4.6 is agent teams in Claude Code. Instead of one agent working sequentially, you can split work across multiple agents that coordinate directly with each other.

How Agent Teams Work:

Agent teams operate through parallel task execution. One agent handles frontend changes, another tackles backend logic, a third manages tests. Each agent owns its piece and coordinates autonomously.

Scott White, Head of Product at Anthropic, compared this to having a talented team working for you. The segmenting of agent responsibilities allows them "to coordinate in parallel [and work] faster."

The Setup Reality:

Claims that agent team setup takes "4 minutes" are technically true but misleading. The 4 minutes covers connecting to Claude Code and initializing a team. Actually configuring useful agent workflows requires understanding:

- Task decomposition strategies

- Agent role definition

- Coordination patterns

- Error handling across agents

Production deployments require at least several hours of configuration and testing to get reliable results. Simple demos work quickly. Complex workflows take real engineering effort.

What You Can Actually Build:

Agent teams excel at code review workflows. Multiple agents can read different parts of a large codebase in parallel, share findings, and synthesize a comprehensive review faster than a single sequential agent.

They work well for parallel research tasks. One agent gathers data from APIs, another processes it, a third generates analysis, all working simultaneously.

They struggle with tasks requiring sequential dependencies or complex state management. If Agent B needs Agent A's complete output before starting, parallel execution provides minimal advantage.
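The effect of dependencies on parallel speedup can be made concrete with a critical-path calculation. This is a simplified model, assuming unlimited agents, fixed task durations, and no coordination overhead. The function name and the task durations are made up for illustration.

```python
from functools import lru_cache


def min_wall_clock(durations: dict[str, float], deps: dict[str, list[str]]) -> float:
    """Shortest possible wall-clock time given unlimited parallel agents.

    durations maps task name -> time units; deps maps task name -> tasks
    that must finish first. Assumes the dependency graph has no cycles.
    """

    @lru_cache(maxsize=None)
    def finish_time(task: str) -> float:
        # A task starts only after its slowest prerequisite has finished.
        start = max((finish_time(d) for d in deps.get(task, [])), default=0.0)
        return start + durations[task]

    return max(finish_time(t) for t in durations)


durations = {"frontend": 10, "backend": 10, "tests": 10}

# Independent tasks: three agents finish in the time of the slowest one.
independent = min_wall_clock(durations, {})

# Chain: backend waits for frontend, tests wait for backend.
chained = min_wall_clock(durations, {"backend": ["frontend"], "tests": ["backend"]})
```

With these numbers the independent layout finishes in 10 time units and the chained one in 30, which is exactly what a single sequential agent would take.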

Native Agents vs Custom Code: The Real Comparison

The native vs custom debate misses the point. Both approaches serve different needs. Here's where each actually wins.

Setup Time

Native Agents:

- Initial connection: 5-10 minutes

- Basic workflow: 30-60 minutes

- Production-ready configuration: 4-8 hours

Custom Code (LangChain/CrewAI):

- Environment setup: 15-30 minutes

- Basic implementation: 2-4 hours

- Production deployment: 8-20 hours

Native agents win on speed to first result. Custom code requires more upfront investment but provides granular control from the start.

Control and Customization

Native Agents:

You configure through prompts and Claude's built-in interfaces. Want specific error handling? Explain it in natural language. Need custom logging? Limited options.

The constraint is meaningful. Enterprise deployments often require specific audit trails, compliance checks, or integration with internal systems. Native agents handle this through documented APIs, but customization depth is inherently limited.

Custom Code:

You own the entire orchestration layer. Need to inject custom middleware between agent calls? Straightforward. Want to integrate with internal monitoring systems? Full control.

This flexibility matters most for regulated industries. Financial services and healthcare often require audit trails showing exactly how AI systems made decisions. Custom orchestration provides this visibility.
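As a rough illustration of what "owning the orchestration layer" buys you, here is a minimal audit-logging wrapper. It is a sketch under stated assumptions: `with_audit_log` and `fake_reviewer` are hypothetical names, the agent is a stub rather than a real model call, and a real system would write to durable, access-controlled storage instead of an in-memory list.

```python
import json
import time
from typing import Callable

AgentCall = Callable[[str], str]


def with_audit_log(agent_name: str, call: AgentCall, log: list[str]) -> AgentCall:
    """Wrap an agent call so every request and response is recorded."""

    def wrapped(prompt: str) -> str:
        started = time.time()
        response = call(prompt)
        # One JSON line per call, appended after the call returns.
        log.append(json.dumps({
            "agent": agent_name,
            "prompt": prompt,
            "response": response,
            "started_at": started,
            "duration_s": time.time() - started,
        }))
        return response

    return wrapped


def fake_reviewer(prompt: str) -> str:
    """Stand-in for a real model call, used only for illustration."""
    return f"reviewed: {prompt}"


audit_log: list[str] = []
reviewer = with_audit_log("reviewer", fake_reviewer, audit_log)
result = reviewer("check auth.py")
```

Because the wrapper has the same signature as the call it wraps, the same pattern extends to compliance checks or metrics without touching the agent logic itself.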

Cost Analysis

Native Agents:

You pay Claude's standard API pricing ($5/$25 per million tokens) plus infrastructure for Claude Code if self-hosting. Most teams use the hosted version, making costs predictable.

The hidden cost is token usage. Agent teams can consume 2-3x more tokens than sequential workflows because agents communicate through the API. A task costing $0.50 with a single agent might cost $1.20 with a three-agent team.
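The arithmetic behind that example is easy to reproduce. The prices below are the ones quoted above. The token counts are hypothetical, chosen so the single-agent run costs $0.50 and the team run uses 2.4x the tokens.

```python
INPUT_USD_PER_MTOK = 5.0    # input price quoted in this article
OUTPUT_USD_PER_MTOK = 25.0  # output price quoted in this article


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for a given number of input and output tokens."""
    total = input_tokens * INPUT_USD_PER_MTOK + output_tokens * OUTPUT_USD_PER_MTOK
    return total / 1_000_000


# Hypothetical single-agent run: 50K input tokens, 10K output tokens.
single = request_cost(50_000, 10_000)

# Hypothetical three-agent run that uses 2.4x the tokens overall.
team = request_cost(120_000, 24_000)

multiplier = team / single
```

Logging actual input and output token counts per agent is the only reliable way to find your own multiplier, since it varies with how much the agents need to communicate.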

Custom Code:

Infrastructure costs vary significantly. Hosting LangChain/CrewAI workflows on AWS typically runs $200-800/month for moderate usage. Higher volume deployments can reach $2,000-5,000/month.

You also pay for:

- Development time (ongoing maintenance)

- Monitoring infrastructure

- Error tracking and logging

- Version control and deployment pipelines

One developer maintaining a custom agent system costs $120,000-180,000 annually in salary. This overhead only makes sense at sufficient scale.

Production Reliability

Native Agents:

Anthropic handles infrastructure, model updates, and performance optimization. Your agents automatically benefit from model improvements.

The downside is reduced control during outages. When Claude's API experiences issues, your agents stop working. No fallback options exist beyond waiting.

Custom Code:

You manage reliability end-to-end. This means implementing proper error handling, retry logic, fallback models, and monitoring.

The flexibility allows sophisticated resilience strategies. One production system I've tested routes to GPT-4 when Claude hits rate limits, then back to Claude when it becomes available again. Native agents don't support this pattern.
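A fallback router of this kind can be sketched in a few lines. This is not the code from that system. `RateLimited`, `call_with_fallback`, and the two stub providers are hypothetical, and real provider SDKs raise their own error types that you would need to catch instead.

```python
from typing import Callable, Sequence


class RateLimited(Exception):
    """Hypothetical error a provider wrapper raises when throttled."""


def call_with_fallback(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
) -> tuple[str, str]:
    """Try providers in order; return (provider_name, response)."""
    last_error = None
    for name, call in providers:
        try:
            return name, call(prompt)
        except RateLimited as err:
            last_error = err  # Throttled, so move on to the next provider.
    raise RuntimeError("all providers are rate limited") from last_error


def throttled_primary(prompt: str) -> str:
    """Stub that simulates a provider currently hitting rate limits."""
    raise RateLimited("primary is throttled")


def healthy_secondary(prompt: str) -> str:
    """Stub that simulates a working fallback provider."""
    return f"secondary handled: {prompt}"


used, answer = call_with_fallback(
    "summarize the diff",
    [("primary", throttled_primary), ("secondary", healthy_secondary)],
)
```

Since the primary is tried first on every call, traffic returns to it automatically once it stops raising `RateLimited`.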

When Native Wins

Native agents make sense for specific scenarios.

Rapid Prototyping:

Building a proof-of-concept with agent teams takes hours instead of days. For validating ideas or demonstrating capabilities to stakeholders, the speed advantage is substantial.

Standard Workflows:

Code review, document analysis, and research tasks work exceptionally well with native agents. These workflows map cleanly to Claude's built-in capabilities without requiring custom logic.

Small Teams:

Teams without dedicated AI/ML engineers benefit from native agents. The abstraction layer means product engineers can build agentic workflows without understanding orchestration patterns.

Cost Sensitivity at Low Volume:

Below 1 million tokens daily, native agents typically cost less than maintaining custom infrastructure. The break-even point depends on team size and complexity, but generally sits around 1-2 million tokens/day.
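You can estimate a break-even point for your own situation with simple arithmetic. This sketch assumes custom orchestration adds a fixed monthly overhead and saves a fraction of per-token spend through batching and caching. All three inputs are hypothetical placeholders, and developer time is deliberately left out, so treat the result as a lower bound.

```python
def break_even_tokens_per_day(
    fixed_monthly_usd: float,
    blended_usd_per_mtok: float,
    savings_fraction: float,
    days_per_month: int = 30,
) -> float:
    """Daily token volume at which per-token savings cover fixed overhead."""
    saved_per_token = blended_usd_per_mtok / 1_000_000 * savings_fraction
    return fixed_monthly_usd / (saved_per_token * days_per_month)


# Assumed inputs: $300/month infrastructure, $10 blended price per million
# tokens, and 50% of token spend saved by batching and caching.
estimate = break_even_tokens_per_day(300.0, 10.0, 0.5)
```

With those assumed inputs the estimate comes out at about 2 million tokens per day. Halving the savings fraction doubles the break-even volume, which is why the answer depends so heavily on workload.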

When Custom Code Wins

Custom implementations make sense under different conditions.

Complex State Management:

Workflows requiring persistent state across multiple sessions, custom memory patterns, or complex data structures need custom orchestration. Native agents provide limited state management beyond conversation history.
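To give a sense of what custom state management involves at its simplest, here is a file-backed session store. `SessionStore` is a hypothetical class written for this article. A production system would more likely use a database and would need locking, schema versioning, and access control.

```python
import json
import tempfile
from pathlib import Path


class SessionStore:
    """Minimal file-backed state store keyed by session id (illustrative only)."""

    def __init__(self, directory: Path) -> None:
        self.directory = directory
        self.directory.mkdir(parents=True, exist_ok=True)

    def _path(self, session_id: str) -> Path:
        return self.directory / f"{session_id}.json"

    def load(self, session_id: str) -> dict:
        """Return saved state, or an empty dict for an unknown session."""
        path = self._path(session_id)
        return json.loads(path.read_text()) if path.exists() else {}

    def save(self, session_id: str, state: dict) -> None:
        self._path(session_id).write_text(json.dumps(state))


with tempfile.TemporaryDirectory() as tmp:
    store = SessionStore(Path(tmp))
    store.save("review-42", {"files_done": ["auth.py"]})
    # A new store instance simulates a later session resuming the work.
    restored = SessionStore(Path(tmp)).load("review-42")
    missing = SessionStore(Path(tmp)).load("unknown")
```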

Regulatory Requirements:

Healthcare (HIPAA), finance (SOC 2), and legal workflows often mandate specific audit trails, data handling, and decision transparency. Custom code provides the hooks needed for compliance.

High Volume Deployments:

Above 5 million tokens daily, custom orchestration with optimized batching and caching typically costs less than native agent API calls. Infrastructure overhead becomes negligible compared to API costs.

Multi-Model Strategies:

Production systems often route different task types to different models. Claude for reasoning, GPT-4 for creative tasks, specialized models for domain tasks. Custom code enables this flexibility.
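Task-type routing is mostly a lookup table. In the sketch below the model functions are stubs and the task-type labels are my own. `make_router` is a hypothetical helper, not part of any framework.

```python
from typing import Callable

ModelCall = Callable[[str], str]


def make_router(routes: dict[str, ModelCall], default: ModelCall) -> Callable[[str, str], str]:
    """Build a function that sends each prompt to the model for its task type."""

    def route(task_type: str, prompt: str) -> str:
        # Unknown task types fall through to the default model.
        return routes.get(task_type, default)(prompt)

    return route


def reasoning_model(prompt: str) -> str:
    """Stub standing in for a reasoning-focused model."""
    return f"[reasoning] {prompt}"


def creative_model(prompt: str) -> str:
    """Stub standing in for a model used for creative tasks."""
    return f"[creative] {prompt}"


route = make_router({"creative": creative_model}, default=reasoning_model)
tagline = route("creative", "write a tagline")
analysis = route("analysis", "find the race condition")
```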

Integration Requirements:

Deep integration with internal systems (custom databases, proprietary APIs, legacy systems) works better with custom code. You control authentication, error handling, and data flow completely.

The Hybrid Approach

Most successful deployments don't choose one approach exclusively. They use both strategically.

One pattern combines native agents for standard tasks with custom orchestration for complex workflows. Code review uses Claude Code directly. Multi-step research pipelines with custom business logic run on LangChain with Claude as the model.

Another approach uses native agents for rapid iteration, then migrates proven workflows to custom code for optimization. Start with Claude Code to validate the workflow. Once proven, rebuild with custom orchestration to reduce costs and improve control.

This hybrid strategy requires careful architecture planning but provides the best of both worlds.

Decision Framework

Choosing between native and custom comes down to specific project requirements.

Choose Native Agents When:

- Building proof-of-concept or validating ideas

- Team lacks dedicated AI engineering resources

- Standard workflows (code review, document analysis)

- Volume under 1-2 million tokens daily

- Speed to market is critical

- Budget is constrained (under $10K/month)

Choose Custom Code When:

- Regulatory requirements demand audit trails

- Complex state management across sessions

- High volume (5M+ tokens daily)

- Multi-model strategy needed

- Deep integration with internal systems

- Team has dedicated AI/ML engineers

Consider Hybrid When:

- Some workflows are standard, others complex

- Testing new capabilities before production deployment

- Different teams have different technical capabilities

- Iterating on agentic patterns before optimization
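The checklists above can be condensed into a rough first-pass rule. This is one possible encoding, not a validated model. The `Project` fields, the signal counting, and the cutoffs are my own simplifications of the lists, and the 5M tokens/day threshold is the figure used earlier in this article.

```python
from dataclasses import dataclass


@dataclass
class Project:
    """Hypothetical summary of the requirements from the checklists."""
    tokens_per_day: int
    needs_audit_trail: bool = False
    needs_cross_session_state: bool = False
    needs_multi_model: bool = False
    has_ai_engineers: bool = False


def recommend(p: Project) -> str:
    """Return 'native', 'custom', or 'hybrid' as a starting point."""
    custom_signals = sum([
        p.needs_audit_trail,
        p.needs_cross_session_state,
        p.needs_multi_model,
        p.tokens_per_day >= 5_000_000,
    ])
    if custom_signals == 0:
        return "native"
    # Going fully custom only pays off with people to maintain it.
    if custom_signals >= 2 and p.has_ai_engineers:
        return "custom"
    return "hybrid"
```

For example, `recommend(Project(tokens_per_day=500_000))` returns `"native"`, while a high-volume project with audit requirements and dedicated engineers returns `"custom"`.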

What This Means for Developers

Opus 4.6 doesn't make custom agent frameworks obsolete. It raises the bar for what native capabilities can handle.

The shift mirrors earlier platform evolution. Native mobile development didn't kill custom web apps. Cloud functions didn't eliminate custom backends. Each approach serves different needs.

Developers should think in terms of abstraction levels. Native agents provide a high-level abstraction suitable for standard tasks. Custom code offers low-level control for specialized requirements.

The practical implication is about your skill portfolio. Understanding both native capabilities and custom frameworks makes you more effective. Use native agents to prototype quickly. Use custom code to optimize proven workflows.

As native capabilities improve, the threshold for custom code moves higher. Tasks that required custom orchestration last year now work fine with native agents. This trend will continue, but custom code won't disappear, because production requirements evolve alongside capabilities.

Looking Forward

Opus 4.6 is impressive. The agent teams feature works well for its intended use cases. The adaptive thinking control provides meaningful flexibility.

But production AI systems require more than impressive demos. They need reliability, cost predictability, integration depth, and compliance capabilities. Native agents excel at some of these requirements. Custom code excels at others.

The real advancement in 2026 isn't choosing one approach over another. It's understanding when each makes sense and building systems that leverage both strategically.

For developers building AI products, that's the skill that actually matters.

To build effective AI systems, understanding model architecture provides essential context. I cover transformer fundamentals, embeddings, and attention mechanisms in the Infolia AI tutorial series, designed for developers implementing production AI.
